This post is co-authored with Michael Bolton. We have spent hours arguing about nearly every sentence. We also thank Iain McCowatt for his rapid review and comments.

Testing and tool use are two things that have characterized humanity from its beginnings. (Not the only two things, of course, but certainly two of the several characterizing things.) But while testing is cerebral and largely intangible, tool use is out in the open. Tools encroach into every process they touch and tools change those processes. Hence, for at least a hundred or a thousand centuries the more philosophical among our kind have wondered “Did I do that or did the tool do that? Am I a warrior or just a spear-throwing platform? Am I a farmer or a plow pusher?” As Marshall McLuhan said, “We shape our tools, and thereafter our tools shape us.”

This evolution can be an insidious process that challenges how we label ourselves and things around us. We may witness how industrialization changes cabinet craftsmen into cabinet factories, and that may tempt us to speak of the changing role of the cabinet maker, but the cabinet factory worker is certainly not a mutated cabinet craftsman. The cabinet craftsmen are still out there– fewer of them, true– nowhere near a factory, turning out expensive and well-made cabinets. The skilled cabineteer (I’m almost motivated enough to Google whether there is a special word for cabinet expert) is still in demand, to solve problems IKEA can’t solve. This situation exists in the fields of science and medicine, too. It exists everywhere: what are the implications of the evolution of tools on skilled human work? Anyone who seeks excellence in his craft must struggle with the appropriate role of tools.

Therefore, let’s not be surprised that testing, today, is a process that involves tools in many ways, and that this challenges the idea of a tester.

This has always been a problem– I’ve been working with and arguing over this since 1987, and the literature of it goes back at least to 1961– but something new has happened: large-scale mobile and distributed computing. Yes, this is new. I see this as the greatest challenge to testing as we know it since the advent of micro-computers. Why exactly is it a challenge? Because in addition to the complexity of products and platforms which has been growing steadily for decades, there now exists a vast marketplace for software products that are expected to be distributed and updated instantly.

We want to test a product very quickly. How do we do that? It’s tempting to say “Let’s make tools do it!” This puts enormous pressure on skilled software testers and those who craft tools for testers to use. Meanwhile, people who aren’t skilled software testers have visions of the industrialization of testing similar to those early cabinet factories. Yes, there have always been these pressures, to some degree. Now the drumbeat for “continuous deployment” has opened another front in that war.

We believe that skilled cognitive work is not factory work. That’s why it’s more important than ever to understand what testing is and how tools can support it.

Checking vs. Testing

For this reason, in the Rapid Software Testing methodology, we distinguish between aspects of the testing process that machines can do versus those that only skilled humans can do. We have done this linguistically by adapting the ordinary English word “checking” to refer to what tools can do. This is exactly parallel with the long established convention of distinguishing between “programming” and “compiling.” Programming is what human programmers do. Compiling is what a particular tool does for the programmer, even though what a compiler does might appear to be, technically, exactly what programmers do. Come to think of it, no one speaks of automated programming or manual programming. There is programming, and there is lots of other stuff done by tools. Once a tool is created to do that stuff, it is never called programming again.

Now that Michael and I have had over three years experience working with this distinction, we have sharpened our language even further, with updated definitions and a new distinction between human checking and machine checking.

First let’s look at testing and checking. Here are our proposed new definitions, which soon will replace the ones we’ve used for years (subject to review and comment by colleagues):

Testing is the process of evaluating a product by learning about it through experiencing, exploring, and experimenting, which includes to some degree: questioning, study, modeling, observation, inference, etc.

(A test is an encounter with a product that comprises an instance of testing.)

Checking is the mechanistic process of verifying propositions about the product.

(A check is an instance of checking.)

Explanatory notes:

- “evaluating” means making a value judgment; is it good? is it bad? pass? fail? how good? how bad? Anything like that.

- “learning” is the process of developing one’s mind. Only humans can learn in the fullest sense of the term as we are using it here, because we are referring to tacit as well as explicit knowledge.

- “exploration” implies that testing is inherently exploratory. All testing is exploratory to some degree, but may also be structured by scripted elements.

- “experimentation” implies interaction with a subject and observation of it as it is operating, but we are also referring to “thought experiments” that involve purely hypothetical interaction. By referring to experimentation, we are not denying or rejecting other kinds of learning; we are merely trying to express that experimentation is a practice that characterizes testing. It also implies that testing is congruent with science.

- the list of words in the testing definition is not exhaustive of everything that might be involved in testing, but represents the mental processes we think are most vital and characteristic.

- “mechanistic” means algorithmic; that it can be expressed explicitly in a way that a machine could perform.

- “verifying” means ascertaining the factual status of. Quality is an opinion, not a fact, so quality cannot be verified. But lots of things about a product and its behavior can be settled as a matter of fact.

- “propositions” are statements about a product that may be true or false.
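
To make the last three notes a little more concrete, here is a minimal Python sketch of a check. Everything named in it is hypothetical (the `Observation` type, the `response_time_under` proposition, the 200 ms limit); it is meant only to illustrate that a check settles a matter of fact by an explicit rule, and says nothing about whether the product is good.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Observation:
    """A hypothetical record of something observed about the product."""
    name: str
    value: float


# A proposition is a statement about the product that is either true or false.
Proposition = Callable[[Observation], bool]


def response_time_under(limit_ms: float) -> Proposition:
    """Build the proposition 'the observed value is below limit_ms'."""
    def proposition(obs: Observation) -> bool:
        return obs.value < limit_ms
    return proposition


def check(obs: Observation, proposition: Proposition) -> bool:
    """Mechanistically verify one proposition against one observation.

    The result is a fact (True or False), not a judgment of quality.
    """
    return proposition(obs)


if __name__ == "__main__":
    observed = Observation(name="login response time (ms)", value=180.0)
    print(check(observed, response_time_under(200.0)))  # True
```

Whether 200 ms is the right limit, or whether response time matters at all for this product, is an evaluation that sits outside the check.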

There are certain implications of these definitions:

- Testing encompasses checking (if checking exists at all), whereas checking cannot encompass testing.

- Testing can exist without checking. A test can exist without a check. But checking is a very popular and important part of ordinary testing, even very informal testing.

- Checking is a process that can, in principle, be performed by a tool instead of a human, whereas testing can only be supported by tools. Nevertheless, tools can be used for much more than checking.

- We are not saying that a check MUST be automated. But the defining feature of a check is that it can be COMPLETELY automated, whereas testing is intrinsically a human activity.

- Testing is an open-ended investigation– think “Sherlock Holmes”– whereas checking is short for “output checking” or “fact checking” and focuses on specific facts and rules related to those facts.

- Checking is not the same as confirming. Checks are often used in a confirmatory way (most typically during regression testing), but we can also imagine them used for disconfirmation or for speculative exploration (e.g. a set of automatically generated checks that randomly stomp through a vast space, looking for anything different).

- One common problem in our industry is that checking is confused with testing. Our purpose here is to reduce that confusion.

- A check is describable; a test might not be (that’s because, unlike a check, a test involves tacit knowledge).

- An assertion, in the Computer Science sense, is a kind of check. But not all checks are assertions, and even in the case of assertions, there may be code before the assertion which is part of the check, but not part of the assertion.

- These definitions are not moral judgments. We’re not saying that checking is an inherently bad thing to do. On the contrary, checking may be very important to do. We are asserting that for checking to be considered good, it must happen in the context of a competent testing process. Checking is a tactic of testing.
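
Two of the implications above (an assertion is only part of a check, and checks need not be confirmatory) can be sketched in Python. This is an illustration under assumptions, not a recommended harness: `product_sort` stands in for a product under test and `reference_sort` for an oracle we have chosen to trust, and both are hypothetical.

```python
import random
from typing import Callable, List, Optional

SortFn = Callable[[List[int]], List[int]]


def product_sort(items: List[int]) -> List[int]:
    """Hypothetical stand-in for the product under test."""
    return sorted(items)


def reference_sort(items: List[int]) -> List[int]:
    """Hypothetical oracle: a simple insertion sort assumed to be trustworthy."""
    result: List[int] = []
    for item in items:
        position = 0
        while position < len(result) and result[position] <= item:
            position += 1
        result.insert(position, item)
    return result


def check_sorts_small_list(sort_fn: SortFn) -> None:
    """One check: setup and observation belong to the check,
    but only the final line is the assertion."""
    data = [3, 1, 2]              # setup: part of the check, not the assertion
    observed = sort_fn(data)      # observation: part of the check, not the assertion
    assert observed == [1, 2, 3]  # the assertion itself


def random_difference_sweep(
    sort_fn: SortFn, oracle: SortFn, runs: int, seed: int
) -> Optional[List[int]]:
    """Speculative checking: generate random inputs and return the first one
    on which product and oracle differ, or None if no difference is found."""
    rng = random.Random(seed)
    for _ in range(runs):
        data = [rng.randint(-100, 100) for _ in range(rng.randint(0, 20))]
        if sort_fn(list(data)) != oracle(list(data)):
            return data
    return None


if __name__ == "__main__":
    check_sorts_small_list(product_sort)
    print(random_difference_sweep(product_sort, reference_sort, runs=200, seed=1))
```

The sweep only reports a fact: a difference was found, or it was not. Choosing the input space, deciding which oracle to trust, and working out what a reported difference means are left to the tester.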

Whither Sapience?

If you follow our work, you know that we have made a big deal about sapience. A sapient process is one that requires an appropriately skilled human to perform. However, in several years of practicing with that label, we have found that it is nearly impossible to avoid giving the impression that a non-sapient process (i.e. one that does not require a human but could involve a very talented and skilled human nonetheless) is a stupid process for stupid people. That’s because the word sapience sounds like intelligence. Some of our colleagues have taken strong exception to our discussion of non-sapient processes based on that misunderstanding. We therefore feel it’s time to offer this particular term of art its gold watch and wish it well in its retirement.

Human Checking vs. Machine Checking

Although sapience is problematic as a label, we still need to distinguish between what humans can do and what tools can do. Hence, in addition to the basic distinction between checking and testing, we also distinguish between human checking and machine checking. This may seem a bit confusing at first, because checking is, by definition, something that can be done by machines. You could be forgiven for thinking that human checking is just the same as machine checking. But it isn’t. It can’t be.

In human checking, humans are attempting to follow an explicit algorithmic process. In the case of tools, however, the tools aren’t just following that process, they embody it. Humans cannot embody such an algorithm. Here’s a thought experiment to prove it: tell any human to follow a set of instructions. Get that person to agree. Now watch what happens if you make it impossible for that person ever to complete the instructions. Human beings will not just sit there until they die of thirst or exposure; they will stop themselves and change or exit the process. And that’s when you know for sure that this human– all along– was embodying more than just the process that he or she agreed to follow and tried to follow. There’s no getting around this if we are talking about people with ordinary, or even minimal cognitive capability. Whatever procedure humans appear to be following, they are always doing something else, too. Humans are constantly interpreting and adjusting their actions in ways that tools cannot. This is inevitable.

Humans can perform motivated actions; tools can only exhibit programmed behaviour (see Harry Collins and Martin Kusch’s brilliant book The Shape of Actions, for a full explanation of why this is so). The bottom line is: you can define a check easily enough, but a human will perform at least a little more during that check– and also less in some ways– than a tool programmed to execute the same algorithm.

Please understand, a robust role for tools in testing must be embraced. As we work toward a future of skilled, powerful, and efficient testing, this requires a careful attention to both the human side and the mechanical side of the testing equation. Tools can help us in many ways far beyond the automation of checks. But in this, they necessarily play a supporting role to skilled humans; and the unskilled use of tools may have terrible consequences.

You might also wonder why we don’t just call human checking “testing.” Well, we do. Bear in mind that all this is happening within the sphere of testing. Human checking is part of testing. However, we think when humans are explicitly trying to restrict their thinking to the confines of a check– even though they will fail to do that completely– it’s now a specific and restricted tactic of testing and not the whole activity of testing. It deserves a label of its own within testing.

With all of this in mind, and with the goal of clearing confusion, sharpening our perception, and promoting collaboration, recall our definition of checking:

Checking is the mechanistic process of verifying propositions about the product.

From that, we have identified three kinds of checking:

Human checking is an attempted checking process wherein humans verify propositions without the mediation of tools.

Machine checking is a checking process wherein tools verify propositions without the mediation of humans.

Human/machine checking is an attempted checking process wherein both humans and tools cooperate to verify propositions about the product.

In order to explain this thoroughly, we will need to talk about specific examples. Look for those in an upcoming post.

Meanwhile, we invite you to comment on this.

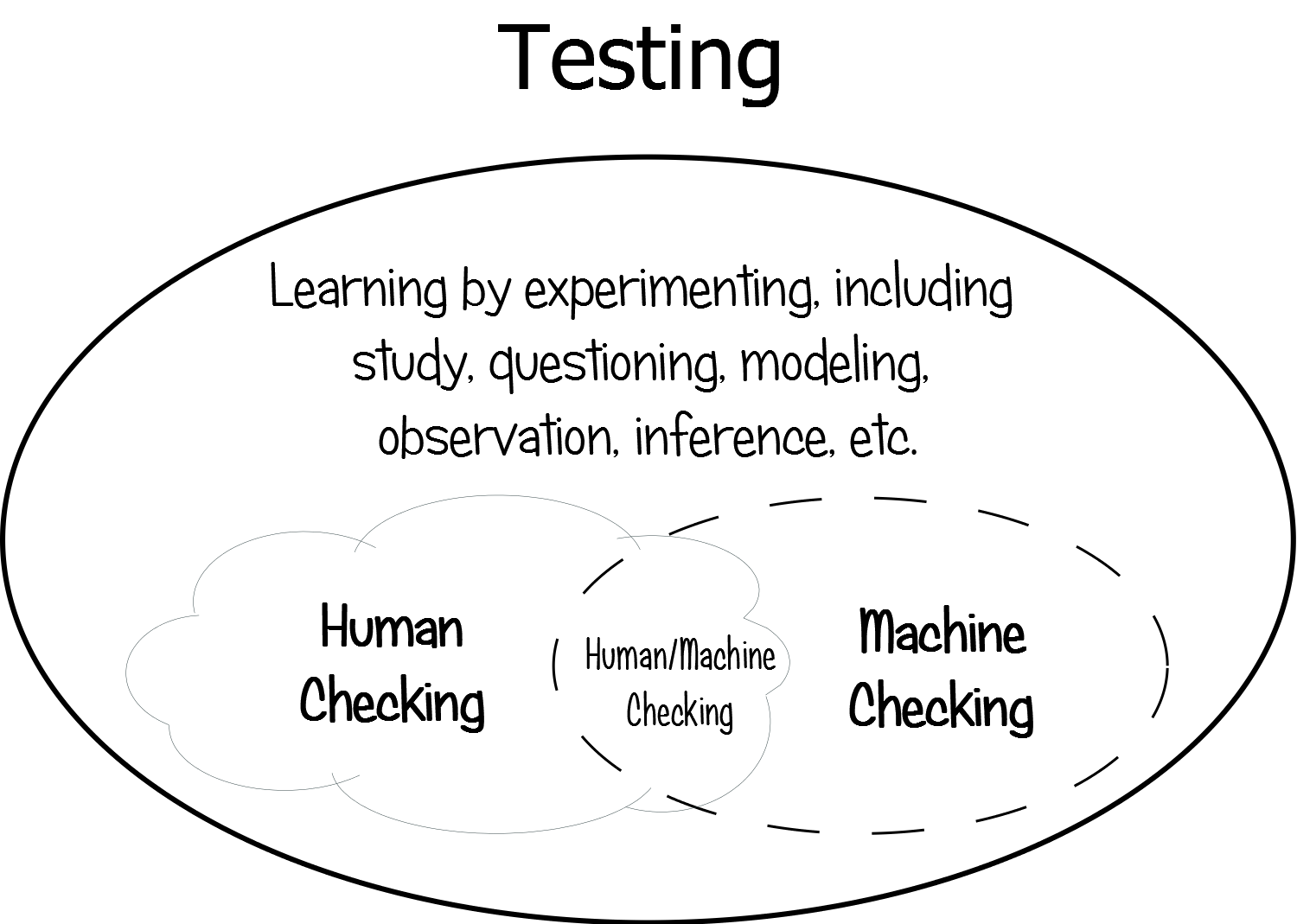

Update 2013-04-10: As a result of intense discussions at the SWET5 peer conference, I have updated the diagram of checking and testing. Notice that testing is now sitting outside the box, since it describes the whole thing; a description of testing is inside of it. Human checking is characterized by a cloud, because its boundary with non-checking aspects of testing is not always clearly discernible. Machine checking is characterized by a precise dashed line, because although its boundary is clear, it is an optional activity. Technically, human checking is also optional, but it would be a strange test process indeed that didn’t include at least some human checking. I thank the attendees of SWET5 for helping me with this: Rikard Edgren, Martin Jansson, Henrik Andersson, Michael Albrecht, Simon Morley, and Micke Ulander.

Update, 2019-12-16: We have added “experiencing” to our definition of testing. We do this to emphasize the role of direct, interactive, human experience with the product, the system of which it is a part, and the context that affects it. Testers can and will use tools (including automated checks) in support of testing, but experience of the product is essential to evaluation and learning.

Update, 2024-08-10: We simplified the definition of checking. We actually did this a few years ago and forgot to update this article.

I like the fact that we are differentiating the capabilities of humans and tools.

I agree with you on how easily humans get distracted from a set of steps to be followed for checking a functionality. Humans can’t do that on a daily basis.

To co-relate this to what we do regularly in our projects:

1. Unit tests and Integration tests are Machine checking, where you validate most of your functionality and business rules

2. Crazy/Monkey testing, handling edge cases in the UI is Human testing?

[James’ Reply: I would say “unit tests” are not tests. Checking is not the same as testing. I don’t know what crazy/monkey testing is.]

Hi James, in your article you mentioned that “testing encompasses checking”.

[James’ Reply: Yes.]

In addition, your diagram shows that checking is inside testing. Now, you said that “checking is not the same as testing”. To me, that’s a little bit confusing.

[James’ Reply: Are you confused when I tell you that wheels are not cars? Leaves are not trees? Parts are not wholes? I bet you understand these examples just as well as I do. People keep thinking that output checking IS testing. They keep using the word testing for checking and in so doing devalue all the elements that make testing powerful. Then they pursue an impoverished process that is called testing (but really is mostly checking), thereby confusing any who look upon that and our craft about what it is we should be doing.]

This is like saying that the set of real numbers is inside the set of complex numbers, but real numbers are not the same as complex numbers. Now, all real numbers are complex numbers, but not all complex numbers are real numbers.

[James’ Reply: You are talking about a subset. I am not talking about a subset. Checking is not a kind of testing. Checking is a part of testing that exists only in the service of testing.

I’m talking about parts and wholes. Legs are not subsets of people, they are parts of people. Legs don’t have a functional existence independent of people.]

So, I would say that checking is testing, but not all testing is checking. In other words: Just as real numbers are a special form of complex numbers, checking is a special form of testing. To me, that’s a different statement than saying that “checking is not the same as testing”. Does that even make sense? 😉 Cheers, Ingo.

[James’ Reply: I’m afraid that does not make sense. Checking is not testing. If you are checking, and only checking, then you are not testing. You CANNOT be testing, because testing, as I explain in this post, is a larger, deeper, social process that guides and situates checking. If you are doing mere checking then you are merely operating a machine or merely simulating a machine.]

Understood. Thanks, Ingo.

Excellent! Thanks you two for clarifying this so nicely! I am a HUGE fan of Checking vs Testing. I will reference this post a LOT.

Cheers Oliver

I really like this development, and I’ll be clear as to why. I’ve long felt that automation of some form will always be important, and it won’t just ‘go away’.

[James’ Reply: I’ve never heard of anyone saying tools would go away… except GUI-based checks which often fall apart under their own weight.]

I’ve also felt, that while it may provide value, until we have the ability to have competent ‘AI’ to emulate human cognition, that computer checking, would remain as any other program has been for as long as I can remember.

[James’ Reply: Until? Look, when software can test itself, you won’t need to worry about testing at all, or development for that matter. Programs will write themselves before testing is automated.]

That being, I remember as a young child, watching some program or movie and someone was explaining that Computers only do what humans tell them to do. This is true, but what often gets missed, I think, is that humans do not always give the computer everything in these checks because there are boundaries in what can be checked by a machine, or trying to script that much would add significant delay in completion of these checks.

So we probably find, especially with the prevalence of agile thinking, that machine checking takes on a form that looks almost as ‘minimal viable’ as the code to which is also up to a ‘minimal viable’ standard. I think this is why we need human beings involved in Testing as an exploratory activity in addition to the machine only checking.

Interesting and detailed post. Thanks to you and MB, as always.

Perhaps you’ll explain this more in the future posts you mention but I’m stuck a little on this…

“Human checking is a checking process wherein humans collect the observations and apply the rules without the mediation of tools.”

…combined with this…

“And that’s when you know for sure that this human– all along– was embodying more than just the process he agreed to follow and tried to follow. There’s no getting around this if we are talking about people with ordinary, or even minimal cognitive capability. Whatever procedure humans appear to be following, they are always doing something else, too. Humans are constantly interpreting and adjusting their actions in ways that tools cannot. This is inevitable.”

As I understand it Human Checking is actually Testing…

“Testing is the process of evaluating a product by learning about it through experimentation, which includes to some degree: questioning, study, modeling, observation and inference.”

…or perhaps it’s not as it doesn’t meet every part of this definition? A human following a particular process will always be interpreting, observing, inferring(?), and most likely learning… even if there is no tangible output from it.

Or am I just way off? There is a very good chance of that!

[James’ Reply: This is a good question. I have added this text to the post: “You might also wonder why we don’t just call human checking “testing.” Well, we do. Bear in mind that all this is happening within the sphere of testing. Human checking is part of testing. However, we think when a human is explicitly trying to restrict his thinking to the confines of a check– even though he will fail to do that completely– it’s now a specific and restricted tactic of testing and not the whole activity of testing. It deserves a label of its own within testing.”]

Thanks for the post on Checking vs Testing.

It was easily understandable – difference of checking and testing.

As programmers use compilers,editors such as Visual studio, then the programming is called “Automation programming”?

like wise,we use tools to test: QTP/Selenium – we call “Automation Testing”

I would like to know: What tools are there for machine checking?

[James’ Reply: Any programming language is a tool for machine checking.]

It may also be an interesting addition to your cabinet making analogy to consider that even in IKEA there are those skilled persons that design the cabinets to be made in the factories, and they work with skilled ‘cabineteers’ who develop the design into functional prototypes prior to the design moving into factory production. These skilled craftsmen could be considered as the humans that design the algorithms that will be later used either as part of human and/or machine checking; in fact, they would probably be deciding what type of checking is appropriate.

I certainly agree with your premise that there should be no moral judgements on the importance of checking activities. These checking activities are considered just as important in my domain where software is safety critical (e.g. defence, aerospace, …). Having said that the humans involved in the process certainly enjoy testing activities ahead of human checking, and they value the contribution of machine checking to their daily job satisfaction state.

[James’ Reply: Good point. Thank you.]

I appreciate this post. It clarifies a lot.

Hi James

Thank you for this great post and helping to clarify the original post by Michael Bolton. Can you inform me if my own definitions now still apply?

In very simple terms I see checking as confirming what you think you already know about the system and testing as asking questions in which you do not know the answer.

regards

John

[James’ Reply: Your definitions are not consistent with ours, as far as I understand them. We are not saying that a check must confirm anything. It just applies a rule to an observation. This could be motivated by a confirmatory strategy, or something else.

Furthermore, strictly speaking you only perform a check when you are worried that you may not know the answer. Otherwise there is no point at all.

As a loose intuitive “serving suggestion” for checks versus tests and not a definition, however, I have no problem with what you said.]

James,

I like this article and I think that the distinction between testing and checking is an important one, and I have been using it within my organisation now for some time (we use the term ‘assessment’ for the sapient testing activity).

I have, however, a concern over your definition of checking.

You say ‘we distinguish between aspects of the testing process that machines can do versus those that only skilled humans can do. We have done this linguistically by adapting the ordinary English word “checking” to refer to what tools can do.’

For me the use of tools and automation infrastructures is about more than checking. A critical role for tools is to gather information that is inefficient or impractical for a human to gather. We might then decide to apply checking against a subset of this information. One principle that I adopt in automation which I think is important to bear in mind when applying checks is to gather information, further to that required to furnish the check, to allow the appropriate re-assessment by a human tester should the check flag up an unexpected result. I worry that referring to tool use as ‘checking’ may place too much emphasis on the check at the expense of thinking about the other information that we might gather. Tools can be far more powerful if we approach them from the perspective, not of abstracting information behind checks, but of looking at what information they can tell us. In many cases I use tools without applying checks at all, but rather to gather the information that I need on the behaviour of a system during a recursive or parallelised operation which I could not achieve without the tool. Rather than referring to “checking” as “what tools can do”, I would suggest that checking is a subset of what tools can do, just as both are a subset of the greater activity of testing.

Thanks again for a thought-provoking post.

Adam.

[James’ Reply: Hi Adam. We did not restrict the role of tools to checking. We said “Please understand, a robust role for tools in testing must be embraced. As we work toward a future of skilled, powerful, and efficient testing, this requires a careful attention to both the human side and the mechanical side of the testing equation. Tools can help us in many ways far beyond the automation of checks. But in this, they necessarily play a supporting role to skilled humans; and the unskilled use of tools may have terrible consequences.”

Furthermore, even in checking, a human and tool can cooperate to perform a check. I just added an extra note in the implications about that, though. I hope it helps.]

Hi James,

Good to see some clarity spoken about this issue. I don’t know if it would have been easier to reclaim the word “sapience” by explaining its difference from “intelligence” but I see why they might be strongly linked in peoples’ minds.

Combining the definition of checking and human checking you have here would it be fair to say that it evaluates to this?:

“Human Checking is the process of making evaluations wherein humans apply algorithmic decision rules to specific observations of a product they have collected, all without the mediation of tools”

Because if so you’ve made the point I want to make yourself. Humans can’t apply algorithmic decision rules. There are tacit processes, interpretations and modelling that humans cannot stop performing even if they wanted to; so your definition of algorithmic (“can be expressed explicitly in a way that a computer could perform”) isn’t possible in theory; so the definition of human checking isn’t actually applicable to something a human is capable of doing. Could you expand on this point, if possible, please?

[James’ Reply: Yes, we say this in the post. A check performed by a human is, in the strictest terms, NOT a check. But in slightly broader terms, it is a human trying to perform a check. Perhaps we should add the word “attempt” in there somewhere. I’ve added it, but Michael may object. We’ll see.]

Would it not be correct to say that, while a “unit test” is “checking”, the process of designing a unit test is a sapient one?

[James’ reply: Yes. Although I usually remember to add the qualifier “good” as in, “a good unit check.” We can certainly create bad unit checks by writing a program that automatically generates them, thereby non-sapiently creating them.]

I find that even though this topic on the surface appears to be quite simple it does prove difficult to explain to other testers the importance of understanding the difference. Often we assume that we understand the meaning of a word (definition) when attributed with context. However it is not until you take a step back and really look in to the details of what you believe the meaning of a word is that you start to realise that your preconceived ideas need to be adjusted.

Why is it difficult to explain and emphasise this difference? In my situation I was in the camp of “yeah of course I know the difference” and why wouldn’t I think that, as these two words are not particularly hard to understand. Now put this in the context of testing software, and without redefining your understanding of what testing vs. checking means you’ll still not understand that there is a significant difference.

In my case, the difficulty of explaining the difference (with clarity) between Testing and Checking comes down to my believing I fully understand the difference, when in reality I agree with it, of course, but haven’t fully understood the need for a distinction.

Having been quizzed recently on what my understanding of a commonly used word is, it has shifted my thought process from thinking I know to questioning what I think I know.

[James’ Reply: We need to come up with a post to describe worked examples. That will help.]

I agree, designing “a good unit check” requires careful thought and is not something that can be generated by a program. I would say that a “unit check” is an output of unit testing, where unit testing includes, but is not limited to, designing “good unit checks”. It follows that, outside of the process of unit testing, running “unit checks” is not to be considered testing. Does my understanding differ from yours?

[James’ Reply: Yes, this fits. Nice summary.

And as an example of checking outside the process of testing, imagine a set of checks constructed for the purpose of fooling people into thinking you had tested, but with no real intent to discover problems. This is more common than many believe.]

I understand machine checking as the thing that started to become “continuous deployment”, whereas “continuous integration” with lots of machine checks inform a human what to do next: green – investigate gaps in the checking strategy, red – investigate what is obviously to the machine broken.

I love the insights about human checking where a human will do some other things than the pure script in your previous terminology, and will miss some things from the scripts. I also love the drawing which details what I had in mind with the discussion around “automation vs. exploration – we got it wrong” (don’t want to put up the shameless plug here).

Thanks for sharing the inspiration.

Thank you James and Michael for this clarifying post. I must say that it was time for it.

Exactly two years ago I had been rolling the subject in my head for a very long time and discussing it with peers, mostly developers, and wrote this post.

http://happytesting.wordpress.com/2011/03/29/good-programmers-check-great-ones-test-as-well/

I would say that through the good discussions in the comments it quite well aligns with your post today. Yours having more structure to enable definitions to become more clear of course.

What I wanted back then was to emphasise the need for testing by programmers before even considering their development task at hand to be done. Testing their code for worthiness of being shown to another person, as an addition to the checking they created.

A thing that I care very much for is the adaptation of ideas across cultural boundaries. Those are the times when ideas get their wings. That is what I was trying back then, getting the ideas going with programmers, but still there were too many unclear variables and it was too easy to shake the ideas off with a shrug. In this case the sapience word was one of those things as you already pointed out, but also that the concept seemed reasonable, but still does not seem to add any value.

[James’ Reply: Well speak for yourself. It adds value for me. But I don’t see how you would use the sapience concept for this. What would be the point? Sapience is about respecting the difference between people and machines. But that respect doesn’t magically create tests for you!]

So for the continuous journey of testing and checking, how would you see this idea get its wings out of testing and throughout software development? The refining blog post is a great start, but how do you see that this can unfold even further? Is this potentially one way towards better management of software projects? Or is this just another one of those keep-in-mind sort of ideas towards better development practices?

[James’ Reply: We hope that it helps people stop thinking that they’ve solved the testing problem just because they made some checks. I also hope that it leads to less waste of testing resources on the endless pursuit of automated checks.]

I would really like to see how this concept can do good within software as a whole.

James,

Thanks for finally ‘retiring’ the use of ‘Sapient’. I’ve always found that word to be a bit confusing for people and have preferred to use the term ‘Cognitive Thought’ (or Cognition) to basically state “Use your brain!” in regards to this line of work. Too many times we hear about the “monkey on the keyboard” view of Testing, which it is not. Testing is about ‘experimentation’ to prove or disprove a hypothesis. In our case, for software, it can be boiled down to: does this do what I expect or not, and if not, then why? This implies some type or level of a priori knowledge. But if we do not have that knowledge then testing can be, as you have stated before, a way to ‘learn’ about the behaviour of the subject being examined (the software or system).

And at times this learning and/or experimentation will involve the use of tools to AID in the process. And that is the key, tools are aids in our work. But the issue I’ve seen over the years is that people believe the tool is the solution, not a mechanism to get to the solution. As we all know sometimes the tool doesn’t help.

[James’ Reply: “cognitive thought” in no way means what we were trying to say with sapient. So, you have inadvertently demonstrated the problem. You aren’t complaining about the term or how we used it. You are complaining about something we actually never tried to do!

Anyway, I like all the rest of what you said. So thanks!]

I just sent this e-mail around to my group. “Mark” is our manager, I’m the senior engineer.

——–

There is an extremely valuable blog post by two software testing thinkers (James Bach and Michael Bolton) here:

https://www.satisfice.com/blog/archives/856

You know how I wave my hands and run off at the mouth about the philosophy of testing? An important part of what I’m trying to communicate is encapsulated in this post and the simple terminological change that they propose.

I would like for this to happen:

– Everyone in our group reads this post.

– We add “Discuss testing vs. checking” to our QA group meeting agenda until we’re all on the same page (or we discover that we agree to disagree).

– If we agree on these principles and this “Testing vs. checking” terminology:

o We publish this blog post (or a summary of it) to the company with the comment that we are now going to be following this terminology.

o We start using this terminology ALL THE TIME and we actively and consistently expect others to do the same.

– Else:

o We cherish the fact that we’ve had some good discussions and we leave it at that.

I would, of course, prefer that the “If” there evaluates to “true”!

Ten or twenty years from now, people will be saying “you know, there was that time that people differentiated ‘testing’ from ‘checking’, and it revolutionized how people think about QA. Wasn’t that great?” …and we have the chance to lead or follow.

By the way, Mark just read this over my shoulder, and he basically agrees, and says we should prepare to talk about this at next week’s (i.e. not tomorrow’s) QA group meeting. Please read and think about this blog post between now and then. I will try to remember to send out a reminder e-mail, say next Monday.

J. Michael Hammond

Principal QA Engineer

Attivio – Active Intelligence

Hi James,

I really like this distinction between human, machine and human/machine checking as a way of avoiding talk about ‘sapience’. The split, to me, is intuitive because, as I try to explain in a (pending moderation) reply to Rahul over at http://www.testingperspective.com/?p=3143, our human wisdom gets in the way of us being able to perform a raw check of an algorithm in the way a machine can. I think this split helps us get away from the idea that a ‘check’ has to be automated as well which is good – though I freely accept that many checks are good candidates for automation.

Regards,

Stephen

I do think tools can only check. This is due to the way an assertion is made. A computer uses things like =, !=, >, <,… to evaluate. As long as a computer cannot make an observation not directly related to the actual evaluation, it is only ever checking. Yes, tools can aid testing but they will still only be checking (not talking about things like data creation or similar here) and yes, there are checks only tools can actually viably do. But the fact remains that this will always be checking.

There are analog computers and neural networks, where this premise might be proven wrong but these I doubt are in common use by testers or anyone for that matter.

Another thing I observed when checking myself: if I manually follow a test script that contains specific checks, it actually forces me at some point to just do an assertion. It is usually the last thing I do. Before that I do lots of other (non-scripted) things. So checking can be used to bring the focus back to something that might be important, but it also shows how bad I am at actually doing checking.

So, as indicated above maybe humans are actually bad at or plain can't do checking (reliably) and that's why we seek out tools for those tasks.

[James’ Reply: If you look at the definition of testing and checking, it is clear that tools CAN do things other than checking. Lots of things. An infinite number of things. A check is a specific kind of thing. But for example: if you use a tool to construct test data, that’s not a check.]
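As a small illustration of a tool doing something other than checking, here is a minimal Python sketch that only constructs test data. The function names and the field ranges are hypothetical, invented for this example and not taken from the post. Nothing in it evaluates the product, so by the definitions above it is not a check.

```python
from itertools import product
from typing import Dict, List, Tuple


def boundary_values(lo: int, hi: int) -> List[int]:
    """Values just outside, on, and just inside an inclusive range."""
    return sorted({lo - 1, lo, lo + 1, hi - 1, hi, hi + 1})


def build_cases(ranges: Dict[str, Tuple[int, int]]) -> List[Dict[str, int]]:
    """Every combination of boundary values across the named fields."""
    names = list(ranges)
    value_lists = [boundary_values(*ranges[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


# Hypothetical input fields; the ranges are assumptions for illustration.
cases = build_cases({"age": (18, 65), "quantity": (1, 3)})
```

What a tester then does with `cases` (explore by hand, feed them to a check, or discard half of them) is a separate decision that the tool does not make.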

Congratulations to authors for presenting the testing/checking aspect thoroughly.

In my opinion, the human factor mainly comes into the picture in the order in which test cases are executed. I have observed human testers to be extremely fast and efficient in determining “that” particular order of executing tests which will pick up the bugs within a short time. Hence humans are more efficient in tests around a functionality change. However, machines are a real blessing for the regression type of checking.

In order to use machines for more functionality checking, we definitely need improvements in automation tools.

In programming there’s a widespread and longstanding concept of assertions: http://en.wikipedia.org/wiki/Assertion_(computing)

A common, if not the most common, application of this today is for test assertions: http://en.wikipedia.org/wiki/Test_assertion

How do checks & checking, as defined here, differ from assertions & asserting as commonly understood in software development, i.e. what difference do you see between asserting something and checking something?

Where do assertions fit into the testing process presented here?

[James’ Reply: Look at the definitions in the post and tell me what you think.]

They strike me as synonymous.

[James’ Reply: Andrew, you can do better than that. They are obviously not synonymous. However, an assertion– properly used according to your first citation– is a kind of machine check. So you have that part right. Unit checks probably use assertions in them, too.

They are not synonymous because a check can be more than an assertion. Human checks are obviously much more, unless you are directly equating a human with a Turing Machine. But even machine checks include the process of gathering the observations, not just the application of the decision rule. Furthermore you might have decision rules that are more complicated than simple assertions. In short, there might be a lot of code associated with a machine check, and no programmer would gesture at my 3,000 lines of Perl which does statistical analysis of thousands of data points and say “that’s an assertion” or even “that’s a whole pile of assertions.” For one thing, there are no actual assertion statements anywhere in it that relate to the product I’m checking. It would fit the definition of human/machine checking, however.

Thank you for raising the question, though. I have added a bit of text to the implications section to talk about assertions.

]
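The difference described in this reply can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_product`, the response-time numbers, and all function names are hypothetical stand-ins, and this is not the Perl analysis mentioned above. The point it shows is that a machine check can bundle the gathering of observations with a decision rule and can produce a richer artifact than a single bit, whereas a bare assertion only applies a rule to a value that is already in hand.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List


@dataclass
class CheckResult:
    """Artifact produced by the check: more than a bare pass/fail bit."""
    passed: bool
    observed: float
    detail: str


def gather_response_times(product: Callable[[int], float], samples: int) -> List[float]:
    """Observation step: interact with the product and record what it does."""
    return [product(i) for i in range(samples)]


def mean_under_limit(observations: List[float], limit: float) -> CheckResult:
    """Decision rule: an algorithmic evaluation of specific observations."""
    avg = mean(observations)
    return CheckResult(avg <= limit, avg, f"mean={avg:.3f}, limit={limit:.3f}")


def run_check(product: Callable[[int], float], samples: int, limit: float) -> CheckResult:
    """A machine check here = gathering observations + applying the rule."""
    return mean_under_limit(gather_response_times(product, samples), limit)


def fake_product(i: int) -> float:
    """Hypothetical stand-in for a real product; returns a made-up time."""
    return 0.1 + 0.01 * (i % 5)


# A bare assertion: one rule applied to one value, with no artifact kept.
assert fake_product(0) <= 0.2

# The fuller check: observes the product repeatedly, then evaluates.
result = run_check(fake_product, samples=10, limit=0.2)
```

Deciding that mean response time is worth looking at, and what to do when `result.passed` is false, sits outside this code and stays with the tester.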

I was reading (testing) this article and came across “The bottom line is: you can define a check easily enough” and realised I hadn’t seen “check” defined or previously used. I then went back and “checked” that I hadn’t missed the definition…

From the checking definition I can assume:

Check: An output of an algorithmic decision rule.

Is that correct?

[James’ Reply: Sorry I meant to include that and forgot. I will add it. A check is “an instance of checking.” Nice and simple. If you need something more specific for some reason, then I would say “a check is the smallest block of activity that exemplifies all aspects of checking.” By this definition, a check must result in an artifact of some kind; a string of bits.]

Then… on the “checking” definition I started thinking about “algorithmic” – this is typically interpreted as a finite number of steps, but doesn’t have to be – there could be randomized algorithms, where the behaviour/result might not be deterministic, or Las Vegas algorithms, which will produce a result but are not necessarily time bounded. All of this might be valid for a check, but I was trying to construct a simpler form/definition without algorithmic – either by replacing algorithmic with deterministic or dropping algorithmic. Thoughts?

[James’ Reply: By algorithmic we mean that it can be represented as an algorithm– in other words that it can be performed by a computing device. We could have said “computable.” Why would you want to drop it, man? You can have an algorithmic process that is not deterministic or that involves random variables.]
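A short Python sketch may help show how a process can be algorithmic without being deterministic. Everything here is a hypothetical example, including the function name and the choice of a sort routine as the thing being checked. The decision rule is fully computable, yet with no seed the inputs differ on every run.

```python
import random
from typing import Callable, List, Optional


def randomized_sort_check(sort_fn: Callable[[List[int]], List[int]],
                          trials: int = 100,
                          seed: Optional[int] = None) -> bool:
    """Apply a fixed decision rule to randomly generated inputs."""
    rng = random.Random(seed)  # seed=None means different inputs each run
    for _ in range(trials):
        data = [rng.randint(-1000, 1000) for _ in range(rng.randint(0, 20))]
        output = sort_fn(list(data))
        # Decision rule: output is in order and holds the same items.
        ordered = all(a <= b for a, b in zip(output, output[1:]))
        same_items = sorted(data) == sorted(output)
        if not (ordered and same_items):
            return False
    return True


ok = randomized_sort_check(sorted)
broken = randomized_sort_check(lambda xs: sorted(xs, reverse=True), seed=1)
```

Passing a seed makes a run repeatable, which is useful when a failure needs to be reproduced; leaving it out trades repeatability for wider input coverage over time.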

On to implications.. As testing encompasses checking I think it’s also important to highlight that “good” or “excellent” checking implies “testing” -> ie the decision to use a check or to do some checking and / or the analysis of those checks and the checking is back in the testing domain (to determine how/what to do next). Making a check or doing some checking without caring about the result would be wasteful – although I’m sure it happens…

[James’ Reply: Checking is a tactic of testing, yes.]

Without this I see a risk that checks/checking in some way form their own “activity” -> someone thinking that we don’t have time for testing, so we’ll do some checking instead (which might be motivated and justified) -> then this becomes a standard/common practice… So, I want the checking justified (as much as the testing) – based on the value of the information we hope to learn from them. Thoughts?

[James’ Reply: You can definitely do checking inside of a messed up and attenuated testing process. That can be okay if the product happens to be great, or if the customers are forgiving.]

I like what I read so far, but I need to do some more thinking / analysis of the above too.

I’ve just read this post and completely agree with the idea (metaphors, concepts, terminology, definitions, etc.). Thus far, I’ve nothing to add. Well done, and thank you!

So……now what? That is, “What do I do with all this information?”

[James’ Reply: Use it as a rhetorical scalpel. Talk about having a checking strategy within your testing strategy, and keep those things distinct. Don’t let talk about checking drown out talk about testing. Be ready to give examples of testing without checking.

In short, use this information to protect testing.]

I figure that I need to “sell” the idea, and then “apply” the idea.

I assume that, in order to sell it, I have to find various “carrots”. I need to find the motivating factor(s) and benefit(s) for each person to accept this new idea. Do you have any suggestions?

[James’ Reply: The principal carrot is that we can protect the business by avoiding obsession with things that don’t provide much business value.]

If I can sell the idea and get everyone speaking the same language, then I have to apply the idea. I need to find practical ways that this idea can be implemented. Any ideas?

[James’ Reply: What do you think it means to “implement” this idea?]

I realize that perhaps these questions aren’t specific to this idea. Perhaps these questions have more general answers. Perhaps what I’m really asking is, “How do I sell and apply *any* new idea (…such as human/machine checking vs. testing)?”

[James’ Reply: Selling an effective approach to testing must begin with you understanding and practicing testing well. Knowing what can and can’t be done by a machine is part of that.]

A future post promises specific examples to help explain this idea thoroughly. I look forward to that post! However, if there IS any specific guidance around what to do with this info, I’d love to see it included, as well.

Thanks!

I’ll be re-reading this a lot in the future. Thanks for what you do to improve the craft.

It is indeed an interesting topic. What I would like to share may be a bit off topic, because I am totally fine with your definitions of testing and checking; what matters to me is how and when to apply them in software projects.

The definition of testing reminded me of some bitter experiences in previous projects. Users quickly discussed and signed the specification in a highly multi-tasking manner (i.e. not focused). But when users were asked to accept the system we had built, they would put aside all the jobs they had on hand and seriously start “testing”, while we only expected them to do “checking” against the signed-off specification. After usually prolonged evaluating, learning, experimenting, questioning, studying, modeling, observing and inferring, they decided that they now understood what they really needed and requested us to build something quite different. Project delay, scope creep, finger-pointing… Why don’t the cabineteers suffer from “testing”?

Skillful cabineteers are everywhere in Hong Kong because they can magically fit all the furniture into houses of less than 400 square feet (usually the size of a room by your standards). Here is a typical sample found on a public blog: http://xaa.xanga.com/9c9f7a6670134249809544/o198225488.jpg. Note that the cabineteers won’t just let us confirm the drawing and then start crafting. They put in all the measurements in inches with color codes. Because of the size of our houses, a quarter of an inch is enough to invalidate the whole design. We therefore do the “testing” up-front: evaluating, learning, experimenting, questioning, studying, modeling, observing, inferring and obtaining each and every measurement. We agree with the cabineteers that these are the “algorithmic decision rules” when “checking” the cabinet upon delivery.

Basically, this is the Acceptance Test Driven Development (ATDD) or Behavior Driven Development (BDD) that we have been practicing in recent years: first testing, then development and finally (automated) checking.

Traditional specification writing is not a good collaboration tool but “testing”, as you have defined it, is. “Testing” magically bridges the communication gap between users and developers. The result is close to zero misunderstanding of what to build and all we need is “checking” and not another round of “testing” upon delivery. (We still have UX testing but it is only the presentation stuff)

Although the above is not totally relevant, I hope that I have looked at testing and checking from a different angle.

[James’ Reply: I’m concerned at the extremely mechanistic view of testing that you seem to have. Not just as you express it here, but on your blog. Your post about the “new V-model” is a love letter to the cause of standardizing without first learning; defining artifacts before exploring the implications of the product. Your references are to Factory School advocates.

Only in a very simple kind of project would I use checking as a principal tactic for testing.

What is so difficult about the concept of developing skills? How come that plays no part in your narrative? The first thing I would do if I had to work under the regime you describe is to break all the rules and reinvent the process.]

James, you misunderstand. It’s your stated definition of checking that strikes me as synonymous with asserting, not what you actually think checking is.

[James’ Reply: If that’s what you wanted to complain about, then it would have saved some time to have said so up front. Now that you have said it, I can reply to that concern. Here’s my reply: That’s not a bug; it’s a feature.]

Obviously, checking, if it “includes the process of gathering the observations, not just the application of the decision rule”, is not synonymous with asserting. However, “the process of gathering the observations” is absent from your definition of checking (“the process of making evaluations by applying algorithmic decision rules to specific observations of a product.”) i.e. your definition implies gathering observations can occur prior to and separate from checking.

[James’ Reply: The art of writing a definition includes choices about what to lock down tight and what to leave loose. We build plasticity into our definitions in order to help them be applied powerfully and without much effort. If we made it explicit that the entire process of observation is included in checking, then people would say that an assertion is NOT a check, but only part of a check. We would also have a definition that is more complicated, harder to remember or quote, and harder to apply.

It’s fair for you to say that an assertion is a check. That fits a reasonable interpretation of the words we used. However, we feel it is also a reasonable interpretation to say that “specific observations” refers not merely to the results of the observation, but also observation process. In other words, one reasonable expansion of “specific observations” is “a specific string of bits that results from an algorithmic process of interacting with and observing the product.”

We don’t care if someone chooses to interpret this more narrowly. By doing so, he is either assigning the observation process to testing in general, or to something else, I guess. But what he can’t say is that it’s wrong for OTHER people to interpret it more broadly and– we think– more powerfully.]

My point is your definition needs sharpening, else it will be misunderstood, and the last thing the discussion around automation needs is even more misunderstanding.

[James’ Reply: We will monitor the issue and adjust our definition further if we feel that there’s any widespread problem with this. Meanwhile, anyone is free to define these words in their own way. We’re not a law-making body. We are merely stating the protocol used in the Rapid Software Testing methodology. And we are sharing our ideas in case other people care what we think… and some people do.]

PS You should post your code on BitBucket or GitHub so people can see what you’re talking about.

[James’ Reply: What I’m talking about is pretty simple to explain, and only the concept matters anyway. Also, I’m not at liberty to release it under creative commons license.]

Interesting post. I wonder if in the follow up post, you might also add a little more context on why you felt it important to draw this distinction so clearly. During my first read, the post sounded like the proverbial cabinet maker complaining about factory made cabinets. Though, on second pass & your responses to the comments, it sounds more like you see an over reliance on “checking” in industry. What are you seeing in practice and how did that influence the need for these definitions?

Thanks,

John

[James’ Reply: the blog at Developsense.com covers that in more depth. See:

http://www.developsense.com/blog/2013/03/versus-opposite/

http://www.developsense.com/blog/2013/03/testing-and-checking-redefined/

http://www.developsense.com/blog/2011/12/why-checking-is-not-enough/

http://www.developsense.com/blog/2009/11/merely-checking-or-merely-testing/

http://www.developsense.com/blog/2009/11/testing-checking-and-convincing-boss-to/

http://www.developsense.com/blog/2009/09/context-probing-questions-for-testing/

http://www.developsense.com/blog/2009/09/tests-vs-checks-motive-for/

This isn’t even all of them! But it’s the main body.]

Thank you both for the refinement of checking.

Trackback & updated blog post on “testing AND checking”

http://jlottosen.wordpress.com/2012/04/02/testing-and-checking/

[What do you think it means to “implement” this idea?]

Perhaps getting “everyone speaking the same language” *is* the point. If you can “talk” right, then it can help you “do” right, too.

Edited to add to my previous reply:

I said: Perhaps getting “everyone speaking the same language” *is* the point. Reading (part of) your response to Dale Emery (on Michael Bolton’s blog), I see that I’m pretty much right:

Me: “Now what…do I do with this information?”

You: “install linguistic guardrails to help prevent people from casually driving off a certain semantic cliff” that results in “software that has been negligently tested”, and ultimately “improve the crafts of software testing”.

Further comments later on but, just as a quick note:

As pointed out by a friend, the term “automatic programming” is used in the context of artificial intelligence and refers exactly to what the name suggests: code that writes new code.

That might not be a big issue here but I thought it’s still worth mentioning, for your consideration.

[James’ Reply: Yes, and “artificial intelligence” is ANOTHER thing that does not exist.]

On algorithmic – I’m very happy that it is used if its meaning is not restricted to finite or deterministic steps. That was the aspect I wanted an answer to.

I’ve used non-deterministic checks in the past – for instance splitting up logfiles on time or size (whichever threshold triggers first) and parsing for certain keywords.
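A rough Python sketch of that kind of check follows. It is a guess at the general shape only: the thresholds, the keywords, and the function names are assumptions, not the commenter's actual code. A chunk is closed when either the time window or the size limit is reached, whichever happens first, and each chunk is then scanned for keywords. In live use the chunk boundaries depend on when log lines happen to arrive, which is where the non-determinism comes from.

```python
from typing import Iterable, Iterator, List, Sequence, Tuple

LogLine = Tuple[float, str]  # (timestamp in seconds, message)


def split_log(lines: Iterable[LogLine],
              max_seconds: float,
              max_bytes: int) -> Iterator[List[LogLine]]:
    """Yield chunks, closing each on the first threshold that triggers."""
    chunk: List[LogLine] = []
    size = 0
    for ts, msg in lines:
        msg_size = len(msg.encode("utf-8"))
        too_old = bool(chunk) and ts - chunk[0][0] >= max_seconds
        too_big = bool(chunk) and size + msg_size > max_bytes
        if too_old or too_big:
            yield chunk
            chunk, size = [], 0
        chunk.append((ts, msg))
        size += msg_size
    if chunk:
        yield chunk


def keyword_hits(chunk: List[LogLine], keywords: Sequence[str]) -> List[str]:
    """Return the messages in a chunk that contain any keyword."""
    return [msg for _, msg in chunk if any(k in msg for k in keywords)]


# Made-up log data for illustration.
sample = [(0.0, "start"), (1.0, "ERROR disk"), (12.0, "ok"),
          (13.0, "x" * 50), (14.0, "FATAL")]
chunks = list(split_log(sample, max_seconds=10, max_bytes=40))
hits = [keyword_hits(c, ("ERROR", "FATAL")) for c in chunks]
```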

James,

Thank you for the pointers on your (and Michael’s) motivations, and for the time to write these thought provoking posts.

Thanks,

John

Highly appreciated blog! It poses basic questions and clearly comes from a heartfelt risk in current developments: that of losing test intelligence in the face of technical abilities. The challenge now is: how to maintain, rediscover or implant testing intelligence?

My intuition on this (“testing intelligence”) would be that it is a mental faculty of judgement: the ability to interpret and distill quality criteria (1) and apply these to objects under test (2). These two abilities of judgement are easily taken for granted: we simply rely on the documents we have (judgement 1) and rely on the test techniques or tools to perform actions on the test objects, whereby we make simple comparisons between expected and real results (judgement 2).

Advanced tools and their current degree of usability have a darkening effect in that they blur the need for critical judgements as a part of testing. Tools can do more and more work for us, we forget to do the most important parts ourselves.

I think it is desirable that authorities, like the authors of this blog, elaborate these topics and come up with more suggestions on how to recognize “testing forgettenness in the face of mere checking” and how to define testing intelligence and how to develop it.

[James’ Reply: The development of testing skill and judgment is our principal professional focus. You could say that nearly every blog post we make tries to get at that subject.]

I really like it when ideas mature and grow into even better things. 🙂

The Testing vs. Checking series was revolutionary in more than one way, since it put into words some things many people felt were wrong.

I believe I have read and thought through the concepts a lot, and at the time I wrote a blog post about the Testing vs. Checking Paradox (http://thetesteye.com/blog/2010/03/the-testing-vs-checking-paradox/).

I eventually closed that post by saying I was wrong in my assumption, but now that I see this post I am not so sure anymore.

Maybe I was touching the concept of Human Checking?

The more you know about something, the more you only need to check against your assumptions. That is, this is not something that can be done by a machine, since a machine cannot know what I know, and it cannot know what I assume in a specific context.

But when I test, I do a mix of learning and checking (personal) assumptions (in a given context).

What do you think about adding the type of oracles into the definitions?

Machine Checking requires one specified oracle; Human Checking requires one or more oracles, with an intelligent judgment about which oracle is good enough?

[James’ Reply: I don’t think I accept your premise that the more you know something, the more you only need to check. If you “know,” why do you even need to check?

If you don’t know, and you want to know, then you need to find out. That requires testing. If you have a stable and trusted model of the world and the product, then it may be that you are comfortable with a checking strategy to serve as your test strategy. In other words, your test strategy may be dominated by the tactic of checking.

Machines don’t check against assumptions. They don’t know what assumptions are. Checks are designed by humans who make many assumptions, however. I don’t see the point of counting oracles, either. Whether you want to call it one oracle or many is subjective.

During human checking, the human attempts to follow the specified check procedure. However the human will bring additional oracles to bear. That is technically outside the scope of the check, but informally is part of it. Thus, there is no need to make that explicit in the definition.]

Hi James,

I am really delighted to have received your reply. To make our discussion fruitful maybe I should describe more about the context in which I give my narrative.

First, I take development skill for granted because it is a must anyway. In short, we follow Uncle Bob’s S.O.L.I.D design principles and Eric Evans’ DDD. For many years we have believed that we are “doing the thing right” but are not “doing the right thing”. Very often, we and users have different interpretations of the same specification. To us, doing the thing right is relatively easier than doing the right thing.

[James’ Reply: Skill cannot be taken for granted. Skill is developed through struggle and study. Furthermore, you have not said anything about testing skill. And the people you cite are not testers.]

Secondly, yes, my projects may have fallen into your simple category. My projects in recent years were mostly 10 to 12 iterations of 4 weeks each, involving two teams of 6 people each. For the current one, to be deployed in June this year, users expressed in a review that it was the first time they had “grown” specifications with “testing” as the collaboration tool. In describing how to test a feature, they evaluate, learn, experiment, question, study, model, observe and infer. Management is especially impressed by the “Specification PLUS test cases” approach, as these are their living documentation.

[James’ Reply: “Test cases” are not testing. Learning is not captured in test cases. Experiments are not even captured by them. Questioning is definitely not… etc.]

Michael Feathers once said that legacy code is code without tests. Test cases inside the specifications are readily executable (both manually and automatically). You know for sure if it is better or worse after checking in a change.

[James’ Reply: Michael Feathers is not a tester. He doesn’t study testing. What he says about coding is worth listening to, but what he says about testing is at best dubious.

Testing never results in knowing anything important “for sure.”]

Of course, the iterative approach also contributes to users’ satisfaction as they “grow” the final product together with us. By playing around with completed features, they understand more and more about what they actually want and provide more realistic requirements ahead. But to realize the benefit of the iterative approach, automated “checking” against test cases inside the feature specifications is mandatory.

[James’ Reply: Mandatory? Obviously that is not true. I have a lot of experience with iterative development in commercial projects, and I do relatively little automated checking. Automated checking can be helpful or it can be a huge useless time sink. You have to be careful with it.]

Requiring users to redo “testing” against completed features is definitely out of the question.

[James’ Reply: I don’t require users to do any testing, ever. Why is that even an issue?]

You know, no matter what development methods we are practicing, there are always some blind spots that can only be pointed out by independent outsiders and the most effective way is to find reputable persons with opposite opinions and ask them questions. So, by your definition of “testing”, do you mean that we have to build a prototype first? We cannot perform “testing” against a feature specification on paper?

[James’ Reply: If you read my definition you see that it can be applied to an idea, just as it is possible to test a physical product. However, the nature of that super-early testing is quite different and in some ways it is obviously limited. There are things you will only discover when you build the real product.]

If that is the case, are there any ways to reduce the “waste” of throwing away the prototype or making many changes to the original design and avoid introducing bugs when making these changes?

[James’ Reply: Why do you choose to call the learning process a waste? It’s just learning, man.]

I understand that custom-built software is much more complicated than custom-built cabinets, but am I too naïve to apply the same principle? We won’t expect the cabineteers to build us a prototype for “testing” before we confirm the final design. We would imagine how the cabinet, the drawing on paper, fits into our 400-square-foot house and give measurements (test cases) down to a quarter of an inch. For example, we would model the cabinet door and specify that, when opened wide, it should leave at least half an inch before touching the sofa.

[James’ Reply: Cabinets are many orders of magnitude less complex than software. Come on, man. The two situations cannot be equated.]

Sigh…just when I was beginning to finally feel comfortable speaking precisely about “testing” and “checking”, you two had to refine it! Fair enough. I know it’s all for the best. Two questions:

What was the problem with Michael’s original “check” definition (an observation, linked to a decision rule, resulting in a bit)? I loved that definition. People always nodded their heads in understanding when I repeated it.

[James’ Reply: Actually, I wrote that original definition (except for the bit part, which Michael added). That definition is a subset of the new one. Whatever cases fit that definition will reasonably fit the new one. With the new one, we wanted to emphasize that the decision rule is algorithmic (i.e. computable; amenable to automation) and that the observation is specific (i.e. expressible as a string, rather than some tacit impression). We wanted to allow that the evaluation might result in more than a bit as a result. We also wanted to distinguish between human and machine checking, so we generalized the definition a little bit in order to afford sub-classing.]

Can you explain the distinction between “Automated Testing” and “Automated Checking” ? I have been attempting to replace the former with the latter but now I’m thinking they can coexist.

[James’ Reply: There is no such thing as automated testing. Testing cannot be automated. Testing can be supported with tools, of course, just as scientists use tools to help their work. Automated checking is a synonym for “machine checking” or possibly “human/machine checking.”]

BTW – I love the cabinet maker metaphor. My brother is a professional cabinet maker. I don’t have the name you were looking for to describe an expert cabinet maker. However, their work is referred to as “museum quality” furniture. I’m doubtful my brother’s skill could ever be automated. He can build an entire cabinet to follow the slight curve of a board that came from a bent tree.

[James’ Reply: Cool!]

My reply got too long, so I wrote it on my blog:

http://ortask.com/testing-vs-checking-tomato-vs-tomato

Thanks.

Mario G.

ortask.com

[James’ Reply: Wow, your reply is a beautiful example of the Dunning-Kruger effect, assimilation bias, and the incommensurability of opposing paradigms. It’s a Factory School idea that’s been put in a bunker and allowed to inbreed for 40 years, untrammeled by the developments in our craft.

You are like a newly discovered comet of wrong.

The next time someone tells me that what I’m saying is just obvious, I can point them to your blog and they will beg my pardon.]

Seems like nothing has changed since Mr. Bret Pettichord put forward the Four Schools of Software Testing 10 years ago, except that it may be five now if Test-Driven Design stands on its own. We don’t exchange ideas, but labels.

[James’ Reply: Plenty has changed! My school has continued to learn and grow, while the rest of you guys… do whatever it is you do other than studying the craft of testing software. It’s not my fault that you are off on that tangent. And if you think I’m off on a tangent, well, that’s what it means to have different and incommensurable paradigms.

You seem to think that completely different ontologies of practice should be able to routinely exchange ideas. I wonder why you think so? Maybe pick up a book on sociology, once in a while, man.]

Thanks for this elaboration on checking vs. testing. I’ve been trying to use these points to make improvements at work since attending a Michael Bolton workshop in Portland a couple of years ago. This additional info will aid in my arguments that we are far too focused on checking with a criminally small amount of time left for testing.

I must also thank you for introducing me to the Dunning-Kruger effect. It’s a concept with which I’m all too familiar (and, I must admit, one I have suffered from a few times), but I didn’t know it had a name.

And an interesting observation: Mario G managed to get through a lengthy post and respond to comments without once using James’ name except when he pasted the response from here. He called out Michael Bolton and Cem Kaner by name, yet James is relegated to ‘et al.’ Fascinating.

[James’ Reply: I am He Who Must Not Be Named!

Mario committed a rookie transgression by posting a comment, here. He either knows or should know that his way of thinking is something I oppose to the degree of heaping Voltairian ridicule upon it wherever I encounter it. I think his ideas are sociologically ignorant and scientifically illiterate mumbo jumbo. The most sympathetic thing I can say is that it is a completely different paradigm and he has a right to express his stupid opinions just the same as I have the right to express mine.

But why he would expect me to show him any sort of respect when he wanders over here to pollute my blog is beyond me. It’s as if Dick Cheney were to write a letter to the editor of Mother Jones magazine…

I’ll argue with anyone who seems to be seriously trying to understand. But I suspect that learning is not his thing.]

My curiosity and some posts below make me think that we might perhaps exchange some ideas.

http://context-driven-testing.com/?p=55

“For the field to advance, we have to be willing to learn from people we disagree with–to accept the possibility that in some ways, they might be right.”

[James’ Reply: The problem is not that you disagree with me. The problem is that you come from a different paradigm. Paradigms cannot be mixed and matched using any simple form of translation. They are incommensurable. In a truly cross-paradigm situation, the communication cannot succeed using any formal means. It requires informal methods of exchange (e.g. a series of shared experiences).

I can disagree with people inside my own paradigm. With people outside, “disagree” is too weak a word. A better way to say it is that I don’t comprehend why you seem to think the way you do. What you say makes little sense. It’s a mystery to me. How do people like you live and reproduce? I don’t know. I suspect that nothing you will say will help.

I have plenty of people around me with whom I can have productive disagreement. I don’t need to make myself crazy trying to convince you to leave behind nearly all of your assumptions and beliefs.]

http://context-driven-testing.com/?p=69

“The distinction between manual and automated is a false dichotomy. We are talking about a matter of degree (how much automation, how much manual), not a distinction of principle.”

[James’ Reply: We *are* actually talking about a distinction of principle, and not one of degree. The sentence you cite is a reference to something else, which is the idea of tool-supported testing (not checking).]

I think this post helps the following:

– good testers understand further subtleties of checks

– testers/developers who had bona fide uses of checks no longer look bad.

– overall clarity on checks

However….

When I had first read Michael’s post/s on checking/testing, it wasn’t so much a new term, it helped me understand better what is “not testing” or what is “bad testing”. Along with that I liked the definitions/conditions for testing such as “a test must be an honest attempt to find a defect” and “a test is a question which we ask of the software”. To me, these clearly delineated a test/not-a-test.

In this post, I would have liked to restate the overall mission of testing. In the diagram, we should show that bad checks are not part of testing. With the current post one can leave with the impression that bad checks are part of testing. (Also worth keeping in mind that bad checks are rampant – much more than good tests – in my opinion.)

I think there are some prerequisites for using checks as described here:

1. One needs to understand the overall mission of testing

2. One needs to explicitly define the meaning of testing

on 1:

When teams focus *completely* on automation, it might seem like their checks are part of testing. However, if there is no overall understanding of testing, I am not sure if there is value in checks.

on 2:

Many groups do not have an explicit definition of testing. I am not sure if you would endorse their checks and tests. In this case, I think testing is hit and miss and one can create ‘bad checks’.

[James’ Reply: Good points. Obviously I advocate good testing, not bad testing. One popular kind of bad testing is that which is obsessed with checking to the exclusion of the rest of testing. Even good checking that is overdone amounts to bad testing. But good checking, as you say, requires a good testing process (including an understanding of the mission) in order to pull off.]

Love this blog post and its subject!

After reading it, a particular scene pops up in my mind:

You’ve all seen the smart little monkey using a stick, probing the anthill to get to the eggs. The stick is a tool and the monkey gains a two-fold advantage from using it: avoiding the fearsome counter-attacking ants and extending his range to reach the eggs.

Like a carpenter using a saw…

Like a tester uses automated testing tools…

In my view it’s always a human (or a monkey for that matter ;)) that holds the other end of the stick and decides how to use it for best result. I think it’s sad that the software industry generally believes that knowledge about the tool itself is more valuable than the human wisdom of when and how to use it.

Automation testing consumes a lot of energy, time and money to develop and maintain, and often the tools grow to be more important than the reason for using them in the first place. To me that’s a very backward way to solve a problem…

If these purified definitions of checking and testing help us to straighten out that misconception, I’m for it all the way!

I’m thrilled to see what comes next!

Great article and feedback discussion.

In this comment made:

“Furthermore, strictly speaking you only perform a check when you are worried that you may not know the answer. Otherwise there is no point at all.”

I am not sure what you mean by ‘not know the answer’. Is this referring to not knowing what the expected result should be (would that be called exploratory testing?) or knowing the expected result but not being sure what the program under test will return?

[James’ Reply: I mean the latter. You must have some algorithmic method of evaluating the result in order for it to be a check. But if you are 100% confident in the outcome of a check (e.g. if you are completely sure that the right numbers will appear on the report, say), then there is no point in running the check (at least from your point of view). It’s no longer a check, it’s just a demonstration done perhaps for the entertainment of others or for ritualistic/magical purposes, such as to appease management or to perpetrate a fraud.]

I am just thinking about how to define and convey instructions to manual testers using this terminology to help solidify this knowledge in my mind. Perhaps one could refer to a manual test script as a ‘Check Script’ or ‘Check Procedure/Case’ depending on how it is written. Maybe if written only at a logical/test condition level or vague level (not clear steps but clear test objective) it could then be considered more than a ‘human checking script’, maybe it can be called an ‘Exploratory Script’. I would think a ‘Test Charter’ would also be more than human checking.

[James’ Reply: I would call a strict set of checking instructions a “check procedure” unless I felt that “test procedure” was necessary in order to prevent management disorientation.

I would call it a test charter or test procedure if it were written in a form that afforded significant exploratory testing.]

I have at times given out a test requirement that I don’t fully understand: I wrote the objective in a manual test script so I could trace it to the requirement, then assigned it to a tester to explore the requirement in the application and write a test script that makes the important checks repeatable. In other words, I asked them to do exploratory testing and produce a ‘Check Script’.

Ugh. Yours is the mentality of a modern-day Sulla or, to put it in terms you’ll understand, Mohamed Morsi.

I used to like reading your blog, but the fact that you can’t handle when people tell you that you’re not “great” is sickening. Signing off and never looking back.

[James’ Reply: This is surreal. Imagine this: someone you have never heard of takes the trouble to introduce himself, claims to have once liked you and now doesn’t like you, then compares you FAVORABLY to two famous and successful world leaders (I would have preferred Churchill, but I can’t have everything). Wouldn’t that make you feel, actually, pretty great?

Let me tell you, normal people don’t get this treatment!

Dear boy, if you want to deflate my ego you should go about it in a different way.]

Nowadays any application of consequence exists in a wider system that includes the company’s internal network but also the external; the internet has made the external network much more complex than ever before. A complex system involves many different actors or agents interacting, and it’s in the nature of complex systems that they exhibit emergent behaviour. ‘Checking’ usually only involves observing the behaviour of an individual actor in a complex system; while that is important, it doesn’t give us much insight into the behaviour of a wider system or the influence of the individual actor on the emergence of that system’s behaviour. We can probably never get complete information on this, and while we may be contractually only responsible for the behaviour of the individual actor, we have a moral responsibility to gain insights into the behaviour of the wider system as a whole. Data has real consequences for people, and it exists in a complex emergent system; if we only check then we are neglecting that moral responsibility. I think it’s only by experimenting, investigating and evaluating that we gain insight into an individual actor’s effects on a system as a whole, and that, for me, is why checking, whether it’s automated or manual or both, can never be acceptable as a complete approach to testing unless we’re testing an application that’s completely irrelevant and lives in its own controlled world with no interactions at all, in which case I would ask why anyone’s building it.

Two books, ‘The Edge of Chaos’ by Mitchell Waldrop and ‘The Quark and the Jaguar’ by Murray Gell-Mann, influenced my thinking on this matter, and I think anyone who’s satisfied to just ‘check’ should read them and really try to understand the importance of what these two authors are saying. Sooner rather than later.

Well, you teach courses, isn’t that what you do?

[James’ Reply: Yes.]

And you teach them to people who think differently or, at least, don’t think like you (otherwise, what need would they have for you to teach them anything). Correct?

[James’ Reply: Yes.]

Thus, for the right amount of money, you teach people even if they have different paradigms.

[James’ Reply: Yes. I value my time. So there are things that don’t get priority unless someone pays me.

However, you may be confusing teaching with “making the student learn.” I can’t make you learn or even listen to me. If you harken from a different paradigm you are likely to sit there thinking “this guy is stark raving mad” or perhaps simply “he doesn’t understand testing.” I’m sure there are some people who do that, having been sent to my class.